Events to DataFrame

ObsPlus provides a way to extract useful summary information from ObsPy objects in order to create dataframes. This transformation is lossy but very useful when the full complexity of the Catalog object isn’t warranted. By default the events_to_df function collects information the authors of ObsPlus have found useful, but it is fully extensible/customizable using the functionality of the DataFrameExtractor.
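To make the idea of a lossy flattening concrete, here is a minimal sketch that does not use ObsPlus or ObsPy at all. The DemoOrigin and DemoEvent classes and the demo_event_to_row helper are hypothetical stand-ins invented for illustration, and keeping only the last origin and magnitude is an assumption of this sketch, not a description of how events_to_df chooses its values.

```python
from dataclasses import dataclass, field

import pandas as pd


# Hypothetical stand-ins for nested event objects; these are NOT ObsPy classes.
@dataclass
class DemoOrigin:
    time: str
    latitude: float
    longitude: float
    depth: float


@dataclass
class DemoEvent:
    event_id: str
    origins: list
    magnitudes: list = field(default_factory=list)


def demo_event_to_row(event):
    """Flatten one nested event into a flat dict (one row of the table)."""
    # Assumption for this sketch: keep only the last origin and magnitude.
    origin = event.origins[-1]
    magnitude = event.magnitudes[-1] if event.magnitudes else float("nan")
    return {
        "event_id": event.event_id,
        "time": pd.Timestamp(origin.time),
        "latitude": origin.latitude,
        "longitude": origin.longitude,
        "depth": origin.depth,
        "magnitude": magnitude,
    }


demo_events = [
    DemoEvent(
        "demo/1",
        origins=[
            DemoOrigin("2007-08-06T01:44:48", 39.46, -111.23, 3000.0),
            DemoOrigin("2007-08-06T01:44:49", 39.47, -111.22, 3500.0),
        ],
        magnitudes=[2.0, 2.1],
    ),
    DemoEvent(
        "demo/2",
        origins=[DemoOrigin("2007-08-06T08:48:40", 39.46, -111.22, 4000.0)],
    ),
]

# One row per event; the earlier origin and magnitude of demo/1 are dropped.
flat = pd.DataFrame([demo_event_to_row(e) for e in demo_events])
print(flat)
```

The first event carries two origins and two magnitudes, but the resulting table has room for only one of each. That discarded detail is what "lossy" means here.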

To demonstrate how the Catalog can be flattened into a table, let’s again use the Crandall catalog.

[1]:

from matplotlib import pyplot as plt

import obsplus

[2]:

crandall = obsplus.load_dataset("crandall_test")

cat = crandall.event_client.get_events()

ev_df = obsplus.events_to_df(cat)

ev_df.head()

[2]:

| | time | latitude | longitude | depth | magnitude | event_description | associated_phase_count | azimuthal_gap | event_id | horizontal_uncertainty | ... | standard_error | used_phase_count | station_count | vertical_uncertainty | updated | author | agency_id | creation_time | version | stations |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2007-08-07 07:13:05.760 | 39.4605 | -111.2242 | 3240.0 | 2.24 | | 0.0 | NaN | smi:local/248891 | NaN | ... | 0.9901 | 0.0 | 35.0 | NaN | 2012-04-12 10:19:26.228029952 | | | NaT | | DUG, L15A, M14A, M15A, M16A, N14A, N15A, N16A,... |
| 1 | 2007-08-07 02:14:24.080 | 39.4632 | -111.2230 | 4180.0 | 1.26 | LR | 0.0 | NaN | smi:local/248883 | NaN | ... | 0.8834 | 0.0 | 14.0 | NaN | 2018-10-10 21:10:26.864045056 | DC | NIOSH | 2018-10-10 21:10:26.864045 | | DUG, P14A, P15A, P16A, P18A, Q14A, Q16A, Q18A,... |
| 2 | 2007-08-07 03:44:18.470 | 39.4625 | -111.2152 | 4160.0 | 1.45 | LR | 0.0 | NaN | smi:local/248887 | NaN | ... | 0.5716 | 0.0 | 15.0 | NaN | 2018-10-10 21:10:27.576204032 | DC | NIOSH | 2018-10-10 21:10:27.576204 | | DUG, N17A, P14A, P16A, P18A, Q14A, Q16A, Q18A,... |
| 3 | 2007-08-07 21:42:51.130 | 39.4627 | -111.2200 | 4620.0 | 1.65 | | 0.0 | NaN | smi:local/248925 | NaN | ... | 0.5704 | 0.0 | 19.0 | NaN | 2018-10-11 22:08:54.236915968 | DC | NIOSH | 2018-10-11 22:08:54.236916 | | M15A, O15A, P14A, P15A, P16A, P17A, P18A, Q14A... |
| 4 | 2007-08-07 02:05:04.490 | 39.4648 | -111.2255 | 1790.0 | 2.08 | LR | 0.0 | NaN | smi:local/248882 | NaN | ... | 0.9935 | 0.0 | 35.0 | NaN | 2018-10-10 21:15:19.190404096 | DC | NIOSH | 2018-10-10 21:15:19.190404 | | DUG, L15A, M14A, M15A, N14A, N15A, N16A, N17A,... |

5 rows × 28 columns

events_to_df can also operate on other event_clients, like the EventBank.

[3]:

bank = crandall.event_client

ev_df2 = obsplus.events_to_df(bank)

ev_df2.head()

[3]:

| | time | latitude | longitude | depth | magnitude | event_description | associated_phase_count | azimuthal_gap | event_id | horizontal_uncertainty | ... | standard_error | used_phase_count | station_count | vertical_uncertainty | updated | author | agency_id | creation_time | version | stations |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2007-08-07 07:13:05.760 | 39.4605 | -111.2242 | 3240.0 | 2.24 | | 0.0 | NaN | smi:local/248891 | NaN | ... | 0.9901 | 0.0 | 35.0 | NaN | 2012-04-12 10:19:26.228029952 | | | NaT | | DUG, L15A, M14A, M15A, M16A, N14A, N15A, N16A,... |
| 1 | 2007-08-07 02:14:24.080 | 39.4632 | -111.2230 | 4180.0 | 1.26 | LR | 0.0 | NaN | smi:local/248883 | NaN | ... | 0.8834 | 0.0 | 14.0 | NaN | 2018-10-10 21:10:26.864045056 | DC | NIOSH | 2018-10-10 21:10:26.864045 | | DUG, P14A, P15A, P16A, P18A, Q14A, Q16A, Q18A,... |
| 2 | 2007-08-07 03:44:18.470 | 39.4625 | -111.2152 | 4160.0 | 1.45 | LR | 0.0 | NaN | smi:local/248887 | NaN | ... | 0.5716 | 0.0 | 15.0 | NaN | 2018-10-10 21:10:27.576204032 | DC | NIOSH | 2018-10-10 21:10:27.576204 | | DUG, N17A, P14A, P16A, P18A, Q14A, Q16A, Q18A,... |
| 3 | 2007-08-07 21:42:51.130 | 39.4627 | -111.2200 | 4620.0 | 1.65 | | 0.0 | NaN | smi:local/248925 | NaN | ... | 0.5704 | 0.0 | 19.0 | NaN | 2018-10-11 22:08:54.236915968 | DC | NIOSH | 2018-10-11 22:08:54.236916 | | M15A, O15A, P14A, P15A, P16A, P17A, P18A, Q14A... |
| 4 | 2007-08-07 02:05:04.490 | 39.4648 | -111.2255 | 1790.0 | 2.08 | LR | 0.0 | NaN | smi:local/248882 | NaN | ... | 0.9935 | 0.0 | 35.0 | NaN | 2018-10-10 21:15:19.190404096 | DC | NIOSH | 2018-10-10 21:15:19.190404 | | DUG, L15A, M14A, M15A, N14A, N15A, N16A, N17A,... |

5 rows × 28 columns

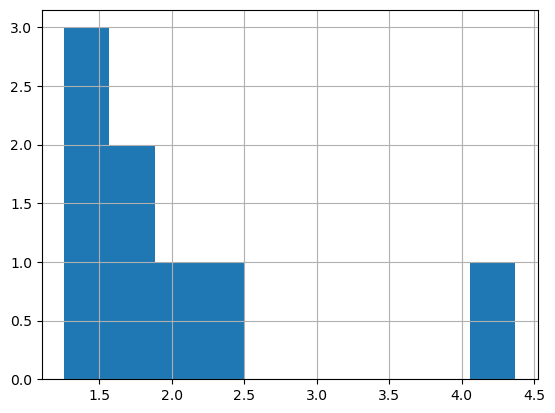

Now we have access to all the wonderful Pandas magics. Here are a few contrived examples of things we may want to do:

[4]:

# plot a histogram of magnitudes

ev_df.magnitude.hist()

plt.show()

Since there aren’t a lot of events let’s look at the picks to make things slightly more interesting:

[5]:

# get pick info into a dataframe

picks = obsplus.picks_to_df(cat)

[6]:

# count the types of phase picks made on all events

picks.phase_hint.value_counts()

[6]:

phase_hint

P 384

S 85

Pb 72

Sb 40

? 33

Name: count, dtype: int64

[7]:

# calculate the max pick_time for each event

picks.groupby("event_id")["time"].max()

[7]:

event_id

smi:local/248828 2007-08-06 01:46:30.424280

smi:local/248839 2007-08-06 08:50:18.697470

smi:local/248843 2007-08-06 10:49:02.491970

smi:local/248882 2007-08-07 02:06:40.963320

smi:local/248883 2007-08-07 02:15:24.573490

smi:local/248887 2007-08-07 03:45:22.084660

smi:local/248891 2007-08-07 07:14:24.078630

smi:local/248925 2007-08-07 21:43:54.936950

Name: time, dtype: datetime64[ns]
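When more than one statistic per event is wanted, the same groupby can feed several aggregations at once. The sketch below uses a small made-up frame (picks_demo, with invented event ids and times) that only mimics the event_id, time, and phase_hint columns seen above, so it runs without the Crandall dataset.

```python
import pandas as pd

# Hypothetical pick table; values are invented for illustration only.
picks_demo = pd.DataFrame(
    {
        "event_id": ["ev1", "ev1", "ev1", "ev2", "ev2"],
        "time": pd.to_datetime(
            [
                "2007-08-06 01:45:00",
                "2007-08-06 01:45:20",
                "2007-08-06 01:46:30",
                "2007-08-06 08:49:10",
                "2007-08-06 08:50:18",
            ]
        ),
        "phase_hint": ["P", "S", "P", "P", "S"],
    }
)

# Named aggregation: each keyword becomes an output column.
summary = picks_demo.groupby("event_id")["time"].agg(
    first_pick="min", last_pick="max", pick_count="count"
)

# Time between the earliest and latest pick of each event.
summary["pick_span"] = summary["last_pick"] - summary["first_pick"]
print(summary)
```

Replacing picks_demo with the picks dataframe from the cells above should give the equivalent per-event summary, assuming its time column has a datetime dtype as the output of cell [7] suggests.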

The example below demonstrates how to calculate travel-time statistics grouped by station, restricted to stations with at least 3 P picks. Since all the events come from roughly the same location (within a few km), we might look for stations that have high standard deviations or obvious outliers as one of the first steps in quality control.

[8]:

# get only P picks

df = picks[picks.phase_hint.str.upper() == "P"].copy()

# add columns for travel time

df["travel_time"] = df["time"] - df["event_time"]

# filter out stations that aren't used at least 3 times

station_count = df["station"].value_counts()

stations_with_three = station_count[station_count > 2]

# only include picks from stations that have at least 3 P picks

df = df[df.station.isin(stations_with_three.index)]

# get stats of travel times

df.groupby("station")["travel_time"].describe()

[8]:

| station | count | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|
| DUG | 6 | 0 days 00:00:26.450391666 | 0 days 00:00:00.271624748 | 0 days 00:00:26.071740 | 0 days 00:00:26.329110 | 0 days 00:00:26.405940 | 0 days 00:00:26.613517500 | 0 days 00:00:26.832520 |
| L15A | 4 | 0 days 00:00:44.281470 | 0 days 00:00:00.395213333 | 0 days 00:00:43.904210 | 0 days 00:00:43.958120 | 0 days 00:00:44.299440 | 0 days 00:00:44.622790 | 0 days 00:00:44.622790 |
| M14A | 3 | 0 days 00:00:42.733800 | 0 days 00:00:00.232198731 | 0 days 00:00:42.465680 | 0 days 00:00:42.666770 | 0 days 00:00:42.867860 | 0 days 00:00:42.867860 | 0 days 00:00:42.867860 |
| M15A | 6 | 0 days 00:00:37.389573333 | 0 days 00:00:00.395734547 | 0 days 00:00:36.924550 | 0 days 00:00:37.155292500 | 0 days 00:00:37.276860 | 0 days 00:00:37.723365 | 0 days 00:00:37.872200 |
| N14A | 4 | 0 days 00:00:34.753022500 | 0 days 00:00:00.309191321 | 0 days 00:00:34.382170 | 0 days 00:00:34.556087500 | 0 days 00:00:34.810995 | 0 days 00:00:35.007930 | 0 days 00:00:35.007930 |
| N15A | 4 | 0 days 00:00:30.787567500 | 0 days 00:00:00.678323974 | 0 days 00:00:30.187990 | 0 days 00:00:30.206282500 | 0 days 00:00:30.793665 | 0 days 00:00:31.374950 | 0 days 00:00:31.374950 |
| N16A | 3 | 0 days 00:00:27.035340 | 0 days 00:00:00.319788540 | 0 days 00:00:26.666080 | 0 days 00:00:26.943025 | 0 days 00:00:27.219970 | 0 days 00:00:27.219970 | 0 days 00:00:27.219970 |
| N17A | 3 | 0 days 00:00:28.347476666 | 0 days 00:00:00.111948217 | 0 days 00:00:28.218210 | 0 days 00:00:28.315160 | 0 days 00:00:28.412110 | 0 days 00:00:28.412110 | 0 days 00:00:28.412110 |
| N18A | 3 | 0 days 00:00:34.095483333 | 0 days 00:00:00.503778524 | 0 days 00:00:33.513770 | 0 days 00:00:33.950055 | 0 days 00:00:34.386340 | 0 days 00:00:34.386340 | 0 days 00:00:34.386340 |
| O15A | 5 | 0 days 00:00:24.222288400 | 0 days 00:00:00.158953507 | 0 days 00:00:24.049170 | 0 days 00:00:24.049170 | 0 days 00:00:24.314742 | 0 days 00:00:24.335500 | 0 days 00:00:24.362860 |
| O16A | 3 | 0 days 00:00:14.805791333 | 0 days 00:00:00.214392546 | 0 days 00:00:14.558760 | 0 days 00:00:14.737037 | 0 days 00:00:14.915314 | 0 days 00:00:14.929307 | 0 days 00:00:14.943300 |
| O18A | 5 | 0 days 00:00:23.389030200 | 0 days 00:00:00.425951696 | 0 days 00:00:22.925160 | 0 days 00:00:23.116750 | 0 days 00:00:23.224960 | 0 days 00:00:23.792720 | 0 days 00:00:23.885561 |
| P14A | 8 | 0 days 00:00:26.757380 | 0 days 00:00:00.369050089 | 0 days 00:00:26.272320 | 0 days 00:00:26.410845 | 0 days 00:00:26.791710 | 0 days 00:00:27.140000 | 0 days 00:00:27.143660 |
| P15A | 9 | 0 days 00:00:16.204747666 | 0 days 00:00:00.692561976 | 0 days 00:00:15.271110 | 0 days 00:00:15.920450 | 0 days 00:00:16.195300 | 0 days 00:00:16.614280 | 0 days 00:00:17.513850 |
| P16A | 11 | 0 days 00:00:07.927102 | 0 days 00:00:00.215584258 | 0 days 00:00:07.682190 | 0 days 00:00:07.795410 | 0 days 00:00:07.859270 | 0 days 00:00:08.034686 | 0 days 00:00:08.397620 |
| P17A | 6 | 0 days 00:00:07.174232666 | 0 days 00:00:00.289962934 | 0 days 00:00:06.905530 | 0 days 00:00:06.943467500 | 0 days 00:00:07.091821500 | 0 days 00:00:07.364075500 | 0 days 00:00:07.607380 |
| P18A | 11 | 0 days 00:00:14.736734454 | 0 days 00:00:00.293448302 | 0 days 00:00:14.258420 | 0 days 00:00:14.580390 | 0 days 00:00:14.690940 | 0 days 00:00:14.856656 | 0 days 00:00:15.265000 |
| Q14A | 6 | 0 days 00:00:30.549576666 | 0 days 00:00:00.335974466 | 0 days 00:00:30.212890 | 0 days 00:00:30.306100 | 0 days 00:00:30.498545 | 0 days 00:00:30.690990 | 0 days 00:00:31.090390 |
| Q15A | 7 | 0 days 00:00:18.810036142 | 0 days 00:00:00.297412245 | 0 days 00:00:18.382690 | 0 days 00:00:18.592790 | 0 days 00:00:18.931850 | 0 days 00:00:18.977141500 | 0 days 00:00:19.215850 |
| Q16A | 10 | 0 days 00:00:10.165477800 | 0 days 00:00:00.328986240 | 0 days 00:00:09.618000 | 0 days 00:00:10.006930 | 0 days 00:00:10.153105 | 0 days 00:00:10.248371250 | 0 days 00:00:10.690000 |
| Q18A | 11 | 0 days 00:00:17.135285636 | 0 days 00:00:00.256429698 | 0 days 00:00:16.894450 | 0 days 00:00:16.975725 | 0 days 00:00:17.010560 | 0 days 00:00:17.186767 | 0 days 00:00:17.665000 |
| Q19A | 3 | 0 days 00:00:29.604353333 | 0 days 00:00:00.383435634 | 0 days 00:00:29.161600 | 0 days 00:00:29.493665 | 0 days 00:00:29.825730 | 0 days 00:00:29.825730 | 0 days 00:00:29.825730 |
| R15A | 4 | 0 days 00:00:27.445802500 | 0 days 00:00:00.545923743 | 0 days 00:00:26.713610 | 0 days 00:00:27.186507500 | 0 days 00:00:27.603435 | 0 days 00:00:27.862730 | 0 days 00:00:27.862730 |
| R17A | 11 | 0 days 00:00:20.501155545 | 0 days 00:00:00.236867827 | 0 days 00:00:20.280000 | 0 days 00:00:20.351515 | 0 days 00:00:20.397810 | 0 days 00:00:20.510261 | 0 days 00:00:20.965000 |
| R18A | 8 | 0 days 00:00:27.290727500 | 0 days 00:00:00.298790493 | 0 days 00:00:26.900100 | 0 days 00:00:27.151197500 | 0 days 00:00:27.153725 | 0 days 00:00:27.449397500 | 0 days 00:00:27.732590 |
| SRU | 14 | 0 days 00:00:12.069393500 | 0 days 00:00:00.243053585 | 0 days 00:00:11.719920 | 0 days 00:00:11.960260250 | 0 days 00:00:12.020410 | 0 days 00:00:12.112279500 | 0 days 00:00:12.505000 |
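One possible way to turn such a table into a quality-control shortlist is to convert the travel times to seconds and flag stations whose standard deviation exceeds a cutoff. The sketch below runs on invented data: the station names AAA and BBB, the travel times, and the 0.5 s threshold are all arbitrary assumptions chosen for illustration, not recommended values.

```python
import pandas as pd

# Hypothetical P-pick travel times for two made-up stations.
p_picks = pd.DataFrame(
    {
        "station": ["AAA"] * 3 + ["BBB"] * 3,
        "travel_time": pd.to_timedelta(
            [10.0, 10.1, 9.9, 20.0, 22.5, 18.0], unit="s"
        ),
    }
)

# Work in float seconds so the statistics are easy to compare with a threshold.
tt_seconds = p_picks["travel_time"].dt.total_seconds()
stats = tt_seconds.groupby(p_picks["station"]).agg(["count", "mean", "std"])

# Arbitrary cutoff (seconds) for this sketch; tune it for a real network.
std_threshold = 0.5
suspect = stats[stats["std"] > std_threshold]
print(suspect)
```

Here only BBB is flagged, because its three travel times scatter by more than two seconds while those of AAA agree to within about a tenth of a second.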

In addition to events_to_df and picks_to_df, the following extractors are defined:

- arrivals_to_df extracts arrival information from an Origin object (or from the preferred origin of each event in a catalog)
- amplitudes_to_df extracts amplitude information
- station_magnitudes_to_df extracts station magnitude information from a catalog, event, or magnitude
- magnitudes_to_df extracts magnitude information
